ChatGPT has been getting a lot of attention recently. I spent some time asking it questions about mental health and self-care. I thought its answers were impressive.

There is a very good chance you’ve heard about ChatGPT. According to itself, it is “a language model developed by OpenAI. It is trained on a massive dataset of text, allowing it to generate human-like responses to a wide range of questions and prompts. The model uses advanced machine learning techniques, such as deep learning and transformer architecture, to generate its responses. ChatGPT has been designed to have a conversational tone, making it useful for applications such as chatbots, question-answering systems, and language generation.”

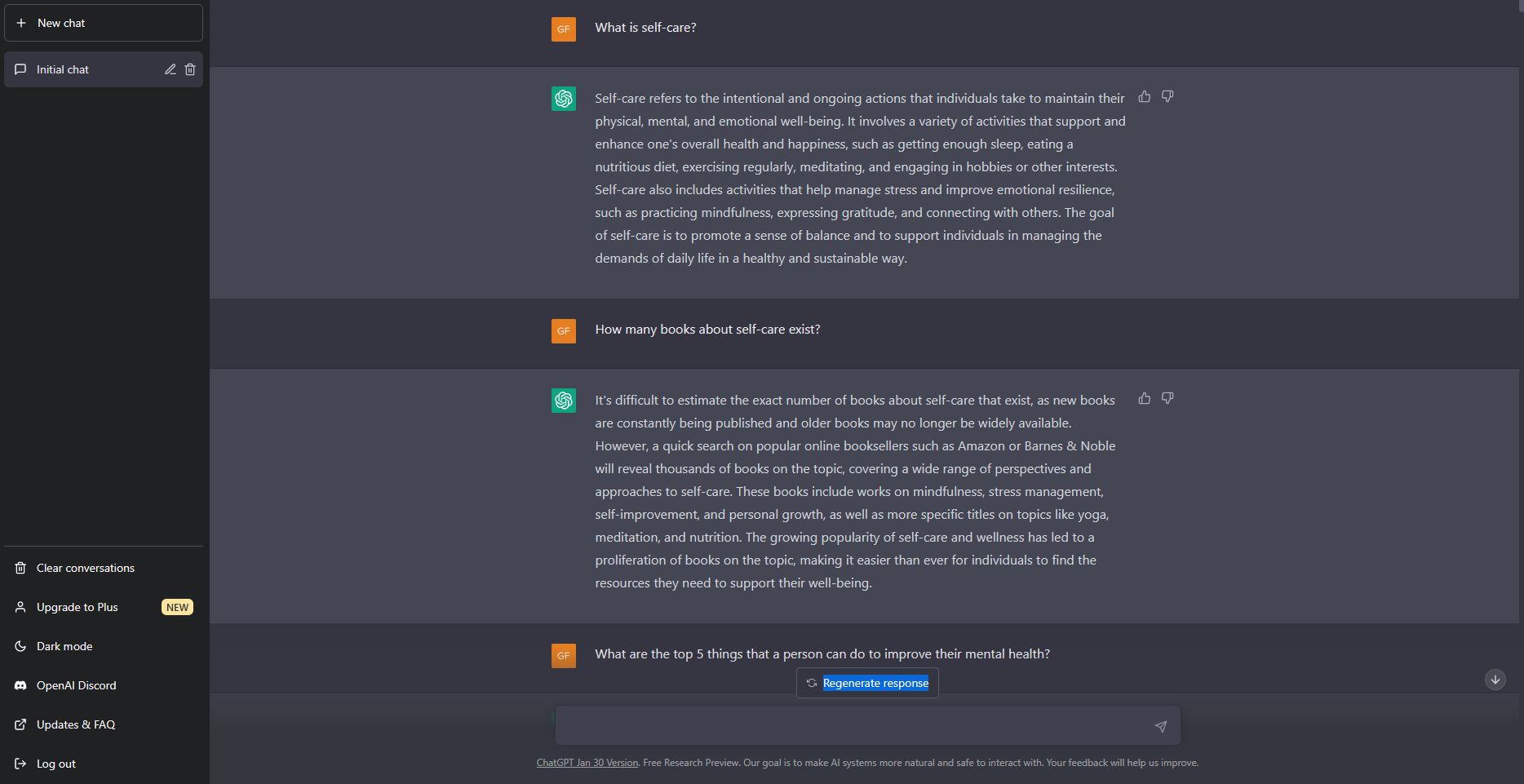

Much of the public discussion about ChatGPT has focused on concerns about how it could be used nefariously (e.g. to pass tests or write essays). This weekend though, I spent some time with ChatGPT exploring how it might benefit people in terms of mental health and wellbeing (a core interest of my work).

My previous interactions with chatbots in the wellbeing space have been uninspiring. They could only answer very basic questions and prompts, and the content of their answers was limited.

My experience with ChatGPT has been different. Over the past couple of days I’ve thrown a range of questions and requests at it (I am still chatting with it), including:

- what is self-care?

- what are the top 5 things a person can do to improve their mental health?

- how do you make friends later in life?

- what are the current treatments for depression?

- what is a good questionnaire for assessing anxiety?

- is meditation or breathing techniques better to reduce stress?

- what are the best time management techniques?

- write me a 30 minute free weights workout

- what is important in starting a new habit?

Its answers have been concise, accurate (in relation to my question) and consistent with, and often better than, the answers I would give to those same questions. And it answered them all in a matter of seconds. I have been really impressed and continue to enjoy asking it questions and setting it tasks.

Where it seemed most powerful was in its ability to create tools I could take away and use. For example, it designed a 30-minute weights workout for me. It gave me a meditation script I could use in teaching. It came up with an example weekly schedule that included fun tasks. It gave me a bunch of journalling prompts to improve my mood. It provided me with an overview of what a self-care class should contain. In short, it was able to provide content that is genuinely useful in my personal and professional life.

There are some limitations, of course. ChatGPT can’t cite where its information comes from. When I asked it where its information on managing a low mood came from, it wrote “I’m sorry, but I do not have the ability to store and recall specific references for the information I provide. However, some of the information I provide about low moods and how to address them may be based on commonly accepted practices or general knowledge in the field of mental health and wellness. If you would like to research the topic further, I would suggest looking for information from reputable sources such as academic journals, government health agencies, or qualified healthcare professionals.” In response to earlier questions, it did say that it was trained on a range of materials including peer-reviewed scientific publications, so there is definitely the possibility of using such systems to navigate complex literature. But for now, it can’t point to specific references.

The other thing that I haven’t really tested is how good it is at just having a chat. How will it respond if, instead of asking factual questions, I tell it about my day, how I am feeling etc.? Early signs are that it shines at answering specific questions, but not at the subtleties of human interaction. Not surprising really. That is something that I think it will take a while for AI to replicate. Still, it outperformed many other counselling chatbots I have used, including those designed specifically to mimic conversation.

It doesn’t claim to be a therapist. When I asked it if it provided counselling it said “As a language model created by OpenAI, I am not a licensed mental health professional and cannot provide personal counseling or therapy. I can provide information and general advice on various topics, but it is important to seek help from a qualified professional for individualized and personalized care.”

Personally, I am optimistic about AI. I think it will help professionals better navigate the information landscape, which has become too large and cumbersome for any individual to keep on top of, even if they specialise. As a psychologist I can see programs like this being incredibly valuable in helping me quickly familiarise myself with core information in key areas. I think it will also be incredibly valuable in developing resources for clients.

And to be honest, I think the ‘train has left the station’ in terms of AI becoming a part of everyday life. Microsoft are already exploring how to integrate the technology into some of their products – including web search and graphic design.

There is a good chance you’ll hear more about systems like ChatGPT, especially as a student, as learning institutions try to work out whether these systems are a threat or a benefit to learning. For honest players, that is, those who use whatever tools are available to genuinely advance their knowledge and skill, these systems will be invaluable. As far as wellbeing goes, I think these could help put high-quality health information in the hands of everyday people.

Have you had any interactions with ChatGPT? How did you find it?

[You might be interested to know that the freaky image on this post was created using AI, with Microsoft’s Designer program.]